Last month, OpenAI CEO Sam Altman hypothesized that we could be seeing a new version of Moore’s law, in which the amount of intelligence in the universe doubles every 18 months. Development cycles in the field certainly seem to be getting shorter and shorter. Last week we published an article (ChatGPT: Your AI-powered Assistant) in which we took a deep dive into the background, applications, and limitations of ChatGPT. Since then, many new tools have been released, and the capabilities of GPT-3.5 Turbo, GPT-4, and AutoGPT are shaking up the industry. Naturally, we wanted to see how good the technology is when it comes to coding. If these GPT models are as capable as we think, they could drastically speed up development and shorten time to market.

With that in mind, we selected five general coding tasks and assessed to what extent GPT-4 was able to suggest solutions, complete code, or solve challenges. The five tasks we explored are:

- Create new code from scratch

- Complete parts of code

- Explain and document pieces of code

- Correct errors in code

- Generate unit tests

1. Code Generation

Creating complete working applications, or parts of them, is something many influencers and online commentators tout as one of ChatGPT’s capabilities, which makes it a perfect first use case to put to the test. We instructed the model to act as a Python developer and generate the Python code for a tic-tac-toe game. This is a basic application that does not involve any complex coding structures. The returned Python code looked structured and clean, and we were able to run it without errors. The application displayed the board status and prompted each player to make their move. It also included scoring validation, that is, a check for whether a player had won.

We then added a little more complexity by asking GPT-4 to check after each turn whether the two players had reached a draw. That is where we encountered the first challenge: the generated code for this additional check was incorrect. We tried rephrasing the required outcome and adding extra pointers, but to no avail. Our conclusion is that the model works for small, simple pieces of code. Complete and complex applications, however, are still a challenge, and we would not rely on it for deliverables.
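For reference, a correct draw check is small. The sketch below is our own illustration, not the code GPT-4 produced, and it assumes a board stored as a flat list of nine cells; the names `winner` and `is_draw` are hypothetical. The subtle point is ordering: a full board is only a draw if nobody has three in a row, because the ninth move can also be the winning move.

```python
from typing import List, Optional

# Assumed representation: a flat list of 9 cells holding "X", "O", or " " (empty),
# indexed 0-8 row by row.
Board = List[str]

# All eight winning lines: three rows, three columns, two diagonals.
WINNING_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),
    (0, 3, 6), (1, 4, 7), (2, 5, 8),
    (0, 4, 8), (2, 4, 6),
]


def winner(board: Board) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, otherwise None."""
    for a, b, c in WINNING_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def is_draw(board: Board) -> bool:
    """A draw means every cell is filled AND nobody has won."""
    return " " not in board and winner(board) is None


if __name__ == "__main__":
    full_no_winner = ["X", "O", "X",
                      "X", "O", "O",
                      "O", "X", "X"]
    print(winner(full_no_winner), is_draw(full_no_winner))
```

This version only reports a draw once the board is full; detecting earlier that no win is still possible would be a different, more involved check.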

2. Code Completion

Code completion is not new; it has existed for quite some time. Most integrated development environments (IDEs) come equipped with an intelligent code completion tool such as IntelliSense. In this case we tried out GitHub’s Copilot, a code completion tool that uses artificial intelligence to predict the next lines of code. Unlike IntelliSense, Copilot can suggest a whole block of code, such as an entire function, based on what has been typed so far. This makes it more flexible and faster for writing new chunks of simple code, whereas IntelliSense only suggests functions or variables that have already been defined.

Based on our tests, code completion works well and the suggestions are often valid, as long as your variables and functions are clearly named. However, there is still a chance of getting bogus suggestions. Overall, it helps developers write code quickly and efficiently. Using GPT-4 for this task is an improvement that fits into your existing workflow without disrupting it.

3. Code Explanation and Documentation

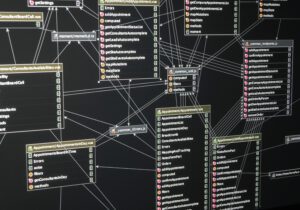

Apart from writing code, teams also need to undertake the important but often tedious task of documenting their code base. In practice, however, code sometimes comes with no documentation or explanation of what it does. To address this issue, our team explored how effective GPT-4 is at explaining a code base and whether it can help create documentation. We began by inputting some legacy Java code and asking GPT-4 to explain it in plain English. We then fed GPT-4 some Python code and asked it to generate documentation for it.

The results were promising: GPT-4 provided a basic understanding of the code’s purpose. However, it struggles to maintain context and flow, which are crucial to understanding code. GPT-4 can be useful for newcomers seeking quick explanations of a codebase, but it cannot grasp the overall structure and flow of the code. The final interpretation therefore still relies on human understanding.

4. Correcting Errors

When writing code, it is common to introduce errors and faulty logic, so it would be helpful if GPT-4 could detect and fix mistakes. To test this, we fed GPT-4 an endlessly recursive function with a nonsensical name, so that GPT-4 could not take any hints from the function name.

Remarkably, GPT-4 solved the problem and gave an accurate description of why the function did not work, even though recursive functions can be hard to reason about. This test showed that GPT-4 can be used to correct errors and explain clearly what is wrong with a function. However, as we increased the length and complexity of the functions, GPT-4 struggled to debug them.
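We do not reproduce the function we used here. As a hypothetical stand-in of the same kind, assuming the simplest form of the bug (a missing base case), the sketch below pairs a meaninglessly named recursive function with its fix; `zorblax` is an invented name, chosen only so the name gives no hint about the intent.

```python
def zorblax(n: int) -> int:
    # Buggy: there is no base case, so every call recurses again and
    # Python eventually raises RecursionError.
    return n + zorblax(n - 1)


def zorblax_fixed(n: int) -> int:
    """Return the sum of the integers from 0 to n (intended for n >= 0)."""
    # Base case: stop recursing once n reaches 0.
    if n <= 0:
        return 0
    return n + zorblax_fixed(n - 1)


if __name__ == "__main__":
    try:
        zorblax(4)
    except RecursionError:
        print("zorblax(4) never reaches a base case")
    print(zorblax_fixed(4))
```

Spotting the missing base case in a few lines like these is the kind of task GPT-4 handled well in our test; the difficulty grows when the terminating condition is spread across a long function.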

5. Generating Unit Tests

Testing is a crucial part of maintaining code quality, as it helps ensure that code is correct and functional upon release. In this experiment, we provided GPT-4 with some legacy Java code and asked it to generate a Gherkin test for it. We also asked GPT-4 to generate a few unit tests for the tic-tac-toe game from the earlier code generation example.

In both cases, GPT-4 performed well, correctly producing the type and form of test requested, provided the request was clearly stated. For unit testing in particular, however, it is essential to describe in detail what needs testing and what does not, as this enables GPT-4 to generate more accurate tests.
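To make “describe what needs testing” concrete, here is a sketch of a hypothetical move-validation function for the tic-tac-toe game and a set of focused tests, each checking one stated behavior. This is our own illustration, not GPT-4 output; `apply_move` and its rules (positions 0 to 8, no overwriting, only "X" or "O") are assumptions.

```python
import unittest
from typing import List


def apply_move(board: List[str], position: int, mark: str) -> List[str]:
    """Return a new board with `mark` placed at `position` (0-8).

    Raises ValueError for an invalid mark, an out-of-range position,
    or a cell that is already taken. The input board is not modified.
    """
    if mark not in ("X", "O"):
        raise ValueError(f"invalid mark: {mark!r}")
    # Check the range before indexing, otherwise -1 would silently hit cell 8.
    if not 0 <= position < 9:
        raise ValueError(f"position out of range: {position}")
    if board[position] != " ":
        raise ValueError(f"cell {position} is already taken")
    new_board = list(board)
    new_board[position] = mark
    return new_board


class ApplyMoveTests(unittest.TestCase):
    def setUp(self) -> None:
        self.empty = [" "] * 9

    def test_places_mark_on_empty_cell(self) -> None:
        self.assertEqual(apply_move(self.empty, 4, "X")[4], "X")

    def test_does_not_mutate_input_board(self) -> None:
        apply_move(self.empty, 0, "O")
        self.assertEqual(self.empty, [" "] * 9)

    def test_rejects_occupied_cell(self) -> None:
        board = apply_move(self.empty, 0, "X")
        with self.assertRaises(ValueError):
            apply_move(board, 0, "O")

    def test_rejects_out_of_range_position(self) -> None:
        for bad in (-1, 9):
            with self.subTest(position=bad):
                with self.assertRaises(ValueError):
                    apply_move(self.empty, bad, "X")

    def test_rejects_invalid_mark(self) -> None:
        with self.assertRaises(ValueError):
            apply_move(self.empty, 0, "Z")
```

Saved to a file, the tests run with `python -m unittest`. Listing behaviors like these explicitly in the prompt is the level of detail we found GPT-4 needed to produce useful tests.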

Moreover, the model generated some unit tests that were neither useful nor correct, which highlights the importance of human supervision. While GPT-4 can assist with test generation, a human still needs to decide which tests to keep. We therefore view GPT-4 as a valuable tool for supporting these tasks, but not something we can blindly rely on.

Conclusion

Based on our experiments, we found that GPT-4 is a highly capable model for supporting coding tasks and assisting developers, although the quality of the results varies with the task at hand. While GPT-4 is not yet mature enough to produce reliable, production-ready code without human supervision, we were still impressed with its abilities. To summarize our findings and assess GPT-4’s performance, we have included a table below with a rating scale of 1 to 5. Overall, we believe GPT-4 has great potential as a tool to assist developers in a range of coding tasks, provided a human remains in the loop to ensure the quality and accuracy of the results.