The EU’s final draft of the Artificial Intelligence Act (1, 2) (AI-Act) was approved on June 14th with a majority vote by the EU Parliament. With this vote the AI-Act now enters its final phase of the legislative process. It is a seminal piece of legislation that takes a risk-based approach to regulating AI systems (3), prohibiting certain applications, while placing strict regulatory and compliance obligations on others. To name a few examples of AI-systems, think of facial recognition software, automation software, recommender systems, and even in a very broad sense, data driven systems that help you optimize operations and take better decisions based on your organizations’ goals.

In our capacity as an AI-solutions provider, we at Eraneos have been closely following the developments around this upcoming legislatory act, its risk-based approach, and how it impacts our line of work and our clients.

A particularly important development with respect to this vote is that the AI-Act has now been revised to treat so-called foundation and generative models as their own special category with specific obligations for providers of such AI models.

AI-Act in a nutshell

In response to the fast-moving developments in technology and AI, the EU has put forward the AI-Act as a regulatory framework for ensuring that AI systems placed and used within the EU are safe, respect existing laws, its citizens’ fundamental rights, and the unions’ values.

Boiled down, the approved AI-Act takes a risk-based approach to AI applications ranking them from unacceptable, high-risk, limited-risk to low-risk applications while also paying special attention to so-called generative AI systems.

Unacceptable applications – systems like real-time facial recognition, social scoring systems (based on behaviour or socio-economic status of people), predictive policing, as well as emotion recognition software for instance in law enforcement. These are all rightfully deemed to be a threat to people and very much against their fundamental rights and therefore not allowed to be marketed within the EU.

High-risk systems – systems which can negatively affect people’s safely or their rights, have to be assessed before being marketed as well as re-assessed throughout their entire lifecycle. These are divided into two broad categories:

1. AI systems that fall into 8 specific application areas:

- Biometric identification and categorisation of natural persons

- Management and operation of critical infrastructure

- Education and vocational training

- Employment, worker management and access to self-employment

- Access to and enjoyment of essential private services and public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Assistance in legal interpretation and application of the law

2. AI systems used in products likes toys, cars, medical devices that have to undergo other safety assessments under the EU’s product safely rules and regulations.

Examples here include search and matching algorithms in recruiting, or things like AI systems intended to be used as safety components in the operation of road and management of traffic, or the supply of water, gas, heating and electrify to name just a few.

Finally limited-risk and low-risk systems include applications like AI enabled video and computer games, spam filters and most other types of AI systems.

Figure 1 – AI-Act compliance ratings of flagship foundation models from various providers (4)

What does the EU-Parliament approved AI-Act mean for foundation models? In short, the approved changes mean that models like GPT4 which are behind the intensified interest in applications like ChatGPT, are now considered high-risk under the AI-Act. This means that their providers,

“…. would have to assess and mitigate possible risks (to health, safety, fundamental rights, the environment, democracy and rule of law) and register their models in the EU database before their release on the EU market.” (5)

Providers of the generative AI applications built on top of foundation models (for instance Open-Ai’s ChatGPT which is built on top of GPT3.5 and GPT4) are now also required to clearly distinguish AI generated content, as well as describe the data sources used to train the models to ensure adherence to copyright laws which is undoubtedly a key area of intense debate in the near future with respect to these models. (6, 7)

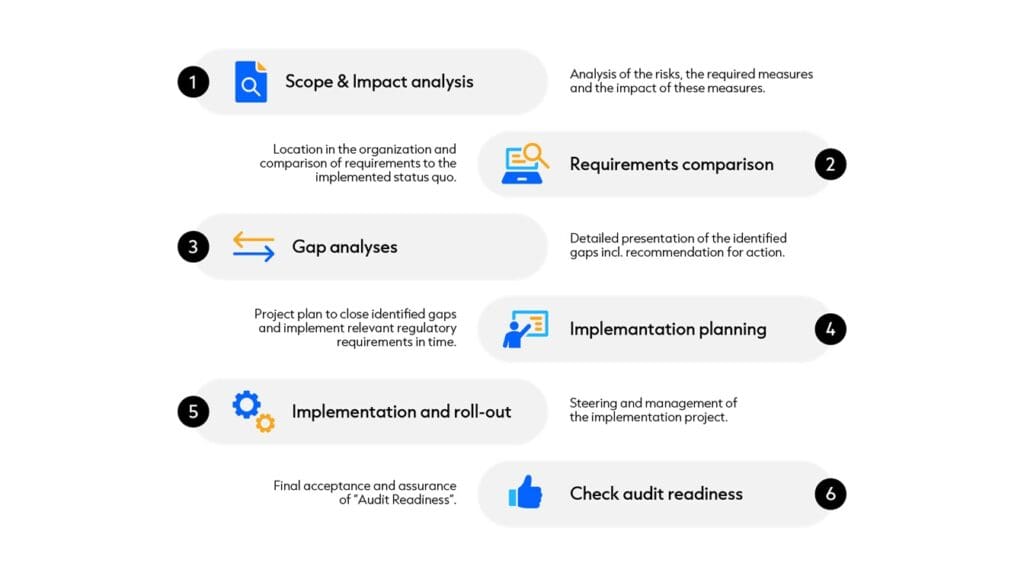

As things stand, work on assessing the various degrees of compliance with the AI-Act is being undertaken by numerous organizations, but it is already clear that certain providers are leaps ahead in this respect.

Recent work by researchers of Stanford’s Human-Centered Artificial Intelligence Lab for instance, scores BLOOM (and its provider HuggingFace) as the most compliant entity with respect to 12 categories of AI-Act requirements analysed. Figure-1, which summarizes their findings, demonstrates how far ahead BLOOM is in this respect.

At the same time, many providers score poorly when it comes to risk mitigation and the disclosure of risks. With high potential for misuse and abuse, relatively few providers disclose the mitigations they have in place, or the efficacy thereof, when it comes to concerns around the safety of foundation models and products/solutions that are derived from such models. As the researchers put it, the providers rarely measure their model’s performance under scenarios of intentional abuse. This has very important implications for cybersecurity concerns with respect to foundation models and generative AI applications and goes to show that the sector still needs more time to mature.

What this means for Eraneos and our clients

So, what are the implications for Eraneos and our clients? The answer of course depends a lot on the particular sectors, applications, and/or solutions that we are working on. But what is certainly clear is that that AI systems cannot be adapted and deployed everywhere and that we will have to view them from a risk management perspective. In other words we need to carefully consider our obligations especially when we want to build solutions or applications on top of high-risk AI systems like foundation models.

In addition to that, there are also costs to compliance that need to be considered. Impact assessment studies by the EU-Commission itself (8) estimate compliance costs of roughly 10-14% of the total development costs. Additionally:

“… the European Commission … puts compliance costs for a single AI project under the AI Act at around 10,000 euros and finds that companies can expect initial overall costs of about 30,000 euros. As companies develop professional approaches and become considered business as usual, it expects costs to fall closer to 20,000 euros.” (9)

Within the EU itself, there are concerns about disproportionate compliance costs and liability risks (10) for companies working with and developing generative AI models.

Nevertheless, we also need to be cognizant of the immaturity of the market when it comes to understanding and mitigating the risks and potential harms of foundation and generative models. Securing generative AI solutions is certainly among the biggest challenges as security researchers have repeatedly demonstrated how easily the guard-rails placed around the underlying models can be bypassed through so-called “prompt-injection” for instance. (11)

A lot of work is currently being undertaken to define, standardize, measure, and benchmark AI systems with respect to cybersecurity by the academic community, as well as governing bodies such as ENISA (12) and standardization bodies like American National Institute of Standards and Technology (NIST) to draw frameworks for better securing AI systems and dealing with their risks (13). These positive steps still require time to become fruitful.

AI is not a magic wand and while it can deliver tremendous value for public and private organizations alike, it should ideally be applied by professionals taking into consideration the various risks and impacts of AI while ensuring that ethical and legal obligations are being met.

In the meantime, our established experience in delivering complex data and AI solutions, and our deep understanding of the complexities of this landscape are our greatest assets in advising stakeholders on how to navigate the implications of the risk-based AI development approach, on how to apply AI in practice, and how to avoid pitfalls and mitigate its risks. If you are wondering how the AI-Act might affect your business or need help navigating this landscape reach out to us or one of our experts directly.

At Eraneos, we combine business understanding, technical knowledge, extensive experience, and our innovative mindset to activate your data and turn it into actual value. We are driven by achieving meaningful results and dedicated to unleashing the full potential of digital, and the full potential of Data & AI. We advise on, design, build, and deliver Data & AI solutions.

Reference list

- The AI Act (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52021PC0206)

- Annexes to the AI-Act (https://eur-lex.europa.eu/resource.html?uri=cellar:e0649735-a372-11eb-9585-01aa75ed71a1.0001.02/DOC_2&format=PDF)

- The exact definition of what constitutes an AI System is still being debated and finalized through the trilogue negotiations process of the EU. The AI-Act broadly refers to “software that is developed with one or more of the techniques and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with” as AI systems.

- Rishi Bommasani and Kevin Klyman and Daniel Zhang and Percy Liang, “Do Foundation Model Providers Comply with the Draft EU AI Act?” (https://crfm.stanford.edu/2023/06/15/eu-ai-act.html)

- “MEPs ready to negotiate first-ever rules for safe and transparent AI”, European Parlimiament Press Release (https://www.europarl.europa.eu/news/en/press-room/20230609IPR96212/meps-ready-to-negotiate-first-ever-rules-for-safe-and-transparent-ai)

- Lois Beckett and Kari Paul, “‘Bargaining for our very existence’: why the battle over AI is being fought in Hollywood”. The Guadrian, July 22 2023. (https://www.theguardian.com/technology/2023/jul/22/sag-aftra-wga-strike-artificial-intelligence)

- Emma Roth, “Hollywood’s writers and actors are on strike”. The Verge, Jul 25, 2023. (https://www.theverge.com/2023/7/17/23798246/strike-hollywoods-writers-actors-wga-sag-aftra)

- Renda, Andrea et al. “Study to support an impact assessment of regulatory requirements for Artificial Intelligence in Europe”. 2021. DOI: 10.2759/523404 (https://op.europa.eu/en/publication-detail/-/publication/55538b70-a638-11eb-9585-01aa75ed71a1)

- Khari Johnson, “The Fight to Define When AI Is ‘High Risk’”. Wired 1 Sep 2021. (https://www.wired.com/story/fight-to-define-when-ai-is-high-risk/)

- JAvier Espinoza, “European companies sound alarm over draft AI law”. Financial Times, June 30 2023. (https://www.ft.com/content/9b72a5f4-a6d8-41aa-95b8-c75f0bc92465)

- Kai Greshake, “Indirect Prompt Injection Threats”. (https://greshake.github.io/)

- European Union Agency for Cybersecurity (ENISA). Multi-Layer AI Security Framework for Good Cybersecurity Pracitces for AI. June 07 2023. (https://www.enisa.europa.eu/publications/multilayer-framework-for-good-cybersecurity-practices-for-ai)

- National Insistitue for Standards (NIST), “AI Risk Management Framework” (https://airc.nist.gov/AI_RMF_Knowledge_Base/AI_RMF)